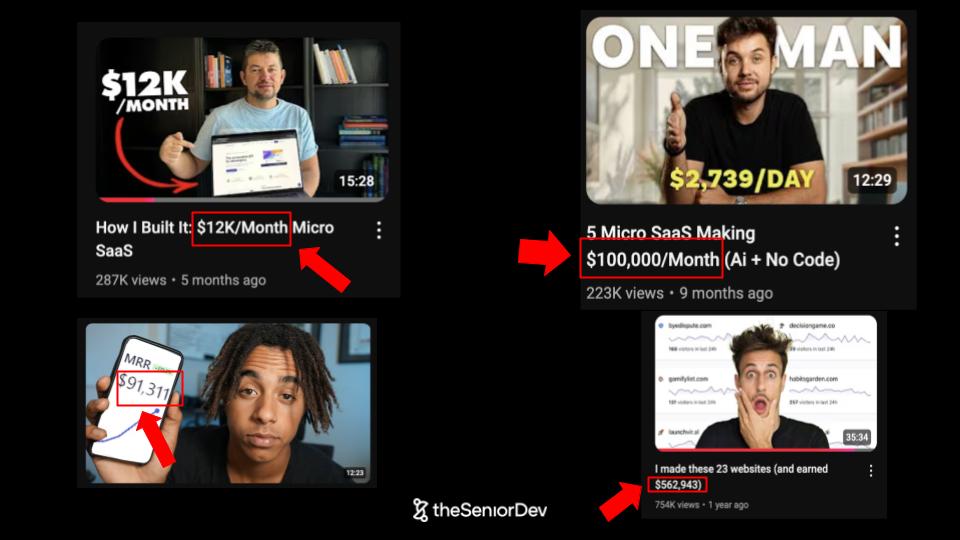

Why I Stopped Using AI as a Senior Developer (After 150,000 Lines of AI-Generated Code)

In the last 3 years, I used AI for coding almost every day.

Judging by my commits, I must have written about 150K lines of code with it.

I used pretty much every AI tool and model out there: GitHub Copilot, ChatGPT, Claude, Cursor, Windsurf… yes, even DeepSeek and Gemini.

At first, it felt like magic.

(you can also watch this article as a video on YouTube, below)

Boy, I was just flying through the code. Shipping MVPs in 1/10th of the time. I achieved in 1 week what a full team of developers used to deliver in over a month.

But then, something happened…

Three months ago, I was working on a feature for a huge production application.

I got a bit lazy and said…

Hey, let me “vibe-code” my way into it. I basically asked Windsurf to write the feature for me.

I literally pasted the requirements in and hit ‘Enter’.

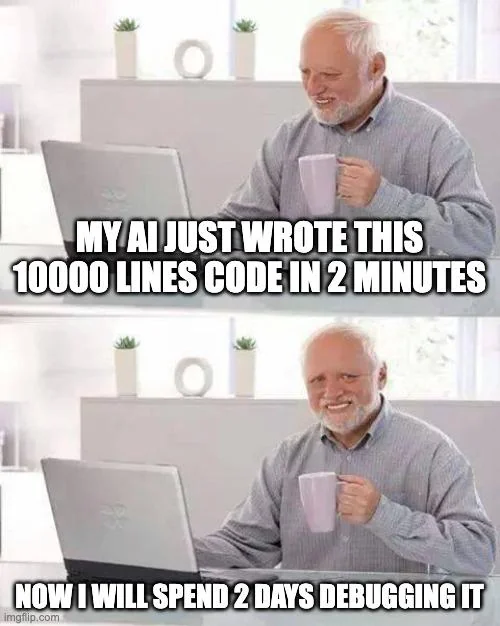

After 15 minutes of waiting and Windsurf randomly changing 7+ files… The only thing it achieved was to add a new bug. And so I said… You know what… Fix the bug.

Windsurf went into another 10-min loop. Did the same.

And so that cycle kept repeating for about 2 hours.

So I decided to do it myself.

And that’s when I realized how bad things were: I had to literally delete the entire code, do a ‘git reset’ and start again from scratch.

The worst part?

This was not the first time I had to delete big chunks of code and start from scratch after using AI.

It usually starts like this…

You prompt the AI. It adds some code.

Then more code. It modifies files. Then more files. Then it creates a bug. Tries to fix it. Fixes it, but breaks something else. And so it loops itself to infinity and beyond.

What you end up with is a huge pile of spaghetti code.

And guess who’s going to have to fix that?

You.

Yes, you.

You are going to have to dig in, read through 100s of lines of AI-generated code and fix them.

Most of the time, it is faster to just start over instead of correcting hundreds of lines of messy AI-generated code.

What I do now is start from scratch myself. Only after that do I use AI to close the gap.

I realized that what might take you 10 seconds to fix by yourself can take you 10 minutes when using AI.

That was my “AHA” moment.

Because when I took a closer look at the codebase I’d been working on with AI, I found…

- Tons of duplicated code:

  - There was basically no component re-usability

  - When I found a re-usable component, it would be over-engineered

- Dead code everywhere (I am talking dozens of useless files):

  - Useless files from previous commits that hadn’t been cleaned up

- Redundant implementations - for example, instead of using ‘lodash.debounce’, the AI would just code its own debounce implementation (reinventing the wheel).

  - AI forgot that, in general, it is better to use as little code as possible. If an external library has already been tested and is maintained by other developers, it is a lot easier to just use that.

  - When you add your own implementation for things that already exist, you also have to add your own tests, your own documentation, your own interfaces. You are wasting time developing stuff yourself that’s already out there.

- Side effects:

  - Sometimes I would tell the AI to fix the TypeScript types, and it would go on and change something in a totally unrelated part of the codebase.

- Useless “tests”:

  - Yes, AI wrote unit tests that didn’t assert anything meaningful

  - A lot of test cases were redundant (they look like different tests, but they actually exercise the same “happy” path)

- Lack of consistency and coherence:

  - Yes, I did add style guides and config files

  - Actually, the more style guides you use, the worse the results you get

- Overengineered and bloated codebase:

  - We had our own custom hooks when we didn’t actually need them

  - Same on the backend, it kept adding useless middleware

- Massive technical debt:

  - I estimate around 60% of the codebase had to be refactored and cleaned up (which is what I’m doing right now)
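To make the debounce point concrete, here is a minimal sketch of the kind of hand-rolled helper described in the list above. It is a hypothetical reconstruction for illustration, not code from the actual codebase; the names `debounce`, `saveDraft` and `saved` are assumptions.

```typescript
// Hypothetical hand-rolled debounce, the kind of helper an AI tends to generate.
// With the library, this whole function is roughly one line instead:
//   import debounce from "lodash.debounce";
function debounce<Args extends unknown[]>(
  fn: (...args: Args) => void,
  waitMs: number,
): (...args: Args) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: Args): void => {
    // Every new call cancels the pending one and restarts the wait.
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => fn(...args), waitMs);
  };
}

// Usage: only the last of three rapid calls actually runs.
const saved: string[] = [];
const saveDraft = debounce((text: string) => {
  saved.push(text);
}, 50);

saveDraft("h");
saveDraft("he");
saveDraft("hello");

setTimeout(() => console.log(saved), 100); // logs [ 'hello' ]
```

It looks harmless, but this version has no `cancel` or `flush`, no `leading`/`trailing`/`maxWait` options, and no tests. The library already ships all of that, so every line here is code you now have to own, document and maintain yourself.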


To sum it up…

I stopped using AI because I just got sick and tired of it.

At some point, I decided I had enough of:

- Waiting 15 minutes for a model to come up with a buggy solution.

- Passing tests even if features had obvious bugs.

- The “pump and dump” of AI models (ooh wait, AGI is around the corner) - it really feels like what happened with JavaScript frameworks 5 years ago, when every week a new one came out that was supposedly so much better than the previous one.

- Reviewing 300+ lines of code for things that could be done in 3!

- Constantly having to specify obvious details (config files didn't fix this).

- Not really knowing what the heck is going on in my codebase (I used D3 to build a graph and didn’t want to deep dive into it - the result was a disaster, and what’s worse, a disaster I could not fix, because I had no clue about D3).

- My codebase becoming a dumpster of AI-generated spaghetti code - if you don’t make the effort to write code, you most likely won’t make the effort to read it, so you just keep accepting suggestions until something breaks.
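The “passing tests even if features had obvious bugs” item is easy to reproduce. Below is a minimal sketch under stated assumptions: `applyDiscount` is a hypothetical function with a deliberate bug, and `check` and `passes` are tiny helpers invented here so the example has no dependencies. None of it comes from the real codebase.

```typescript
// Hypothetical function under test, with a deliberate bug:
// it adds the discount instead of subtracting it.
function applyDiscount(price: number, percent: number): number {
  return price + (price * percent) / 100; // BUG: should subtract
}

// Minimal assertion helper (an assumption, stands in for a real test runner).
function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(message);
}

// Style 1: tests that look busy but assert nothing meaningful.
// All three checks exercise the same happy path and never pin a value.
function weakTests(): void {
  const result = applyDiscount(100, 10);
  check(result !== undefined, "returns something");
  check(typeof result === "number", "returns a number");
  check(!Number.isNaN(applyDiscount(200, 10)), "is not NaN");
}

// Style 2: tests that pin the expected behavior, including an edge case.
function meaningfulTests(): void {
  check(applyDiscount(100, 0) === 100, "0% leaves the price unchanged");
  check(applyDiscount(100, 10) === 90, "10% off 100 should be 90");
}

// Runs a suite and reports whether every check held.
function passes(suite: () => void): boolean {
  try {
    suite();
    return true;
  } catch (_err) {
    return false;
  }
}

console.log("weak suite passes:", passes(weakTests)); // true, despite the bug
console.log("meaningful suite passes:", passes(meaningfulTests)); // false, bug caught
```

The weak suite stays green with the bug in place, which is exactly how a feature can be broken while the test report says everything is fine.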

But, the worst thing about relying on AI to write most of my code:

I slowly watched my technical skills get worse… All while I was playing with ChatGPT like with a slot machine.

You prompt AI. Hope to get lucky. You don’t. Then you prompt again.

Watch out, because this kind of dopamine loop is what hooks people the most.

So, in this article I will cover:

- The 3 lessons I learned from 3 years of AI-coding

- How much AI I use today as a Senior Dev (if any)

- The BIG mistake I see Junior devs making with AI (point of no return)

Lesson #1: To Do The BIG Stuff, You Have To Excel At The Small Stuff.

The idea that AI will do the repetitive tasks while you focus on System Design and Architecture is at best a myth.

If you want to be able to do the BIG stuff… The architecture, the Micro-frontends, then you first have to excel at the small stuff.

Excel at CSS and JavaScript first, if you want to do frontend architecture.

You can’t dream about doing big stuff if you don’t master the fundamentals first.

You can’t build a frontend application if you don't deeply understand CSS (the box model, flex, border, shadows).

When you don’t know how to implement things… You don’t know what to ask for… You don’t know what the possibilities are with the technology you are using.

The reality is that what they call “AI” cannot really think.

Those are LLMs that use the knowledge they’ve been trained on to come up with an answer. But they cannot reason anything from the bottom up.

Lesson #2: When You Write Production Software, Less Is More

You probably know the story of BlackBerry, one of the first smartphone companies… They were completely crushed by the iPhone.

One of the big differences between the two was that the BlackBerry had so many buttons, while the iPhone only had one.

Less is more in product development.

Sophisticated design is actually simple. It is a rule of nature in technology. Senior engineers come up with simpler solutions than Junior engineers.

First versions of anything are more complex than final ones.

Remember:

Not a single software company ever died because of a lack of code…

Or not enough features. Actually, the opposite is true - software companies die when they bloat their software with useless features and forget to focus on what actually brings value (SAP, Salesforce, Oracle…).

More code actually means less productivity.

More technical debt, longer code reviews, lower quality, longer builds, and more useless features. Writing code was never the bottleneck when writing software.

Great software engineers synthesize and simplify.

Bad engineers write tons of redundant code. Simplicity is the value of great software and great engineering.

Lesson #3: It Is Easier To Just Do The Thing Than To Explain Word By Word How To Do It.

This might undermine the whole concept of ChatGPT, but:

- Describing problems, with the level of detail LLMs need, takes more work than actually solving the problem.

- That’s because LLMs lack an intuitive understanding of the world, so they need to be fed tons and tons of context (aka tokens).

- Even so, LLMs are not actually doing logical problem-solving. What they do is closer to pattern matching, which is imprecise and flaky (non-deterministic).

- Adding style configs or guidelines for the AI only makes the queries more unstable: the more context we provide, the higher the probability of hallucination. People believe that the more context you give the AI, the better the solution will be, but the reality is totally different. The more code and the more guides you feed it, the worse it gets, because it can pick any piece of that data and hallucinate with it.

- If you are constantly trying to predict the next token, you might not see the obvious solution in front of you (you can’t see the forest for the trees).

But hey, even if I told you all this…

You will still have people at work telling you (and almost pushing you) to use it.

Sadly, not using AI these days as a software engineer might get you fired. So, what should you do?

Look, you need to use it.

Work with it, and even pretend you like it.

Because sometimes you just have to dance along with the music being played.

The best you can do is use it… And at the same time stay sharp and be aware of its limitations.

Finally, this is how I use AI today:

- Noise vs. Signal: We are seeing devs “forced” to use AI by management, because management thinks it is the next big thing (and that they will miss out if they skip it). The same happened a few years ago with Big Data, Blockchain, Mobile Apps and whatever was trending. Yes, you have to ride the wave. But don’t get sucked into it, either.

- Inference: AI is great at creating a second, third and so on version of something, given you already built version one. You can create test mocks, test cases, classes and interfaces pretty easily. I use Windsurf for that on a daily basis (be aware, it will still make mistakes even in that use case).

- Documentation Scraping: It can also be very useful when documentation is poor. When you find a package whose docs are poorly structured or incomplete, AI can synthesize them and help you out a lot.

- Autocomplete: Pretty useful when used well. But please review every line of code AI wrote before you accept it. The simpler the problem, the better AI will be at finding an acceptable solution.

Okay folks, this is it for this article.

Bogdan and I are putting together another article about “Best practices for AI-assisted coding”, stay tuned if you want to be the first to get it.

For now, we will still be using AI here and there, and documenting our lessons on our YouTube Channel and here on this blog.

Till the next one,

Dragos
